Data Structures

Theory - Data Structure Complexity

You probably know that Arrays and LinkedLists take more time to read from than HashMaps and Sets. I used to justify this to myself reasoning that they must be slower because they store more information - order versus no order! Then I asked myself "how much" information they store, and went down this rabbithole. This blog is about my discoveries about complexity and information as I looked into this.

These ideas are all very simple, but I can't find anything about them online - maybe I just don't know the jargon to search for, or maybe I'm rediscovering something already known but in a different light. If you're a complexity theorist I'd love to hear what you think of this blog in the HN comments.

Arrays and LinkedLists have a 1st element, a 2nd element, etc, so they're storing a lot more information than data structures like Sets and HashMaps (where elements have no order). As a result, you need to pay the O(n) cost of maintaining that order by the next time you read from the ordered data structure, or else it will be inaccurate when you read from it!

The takeaway is that if you want to store elements in order, you need to either have an O(n) read or an O(n) write (or both).

We can generalize this idea to all data structures!

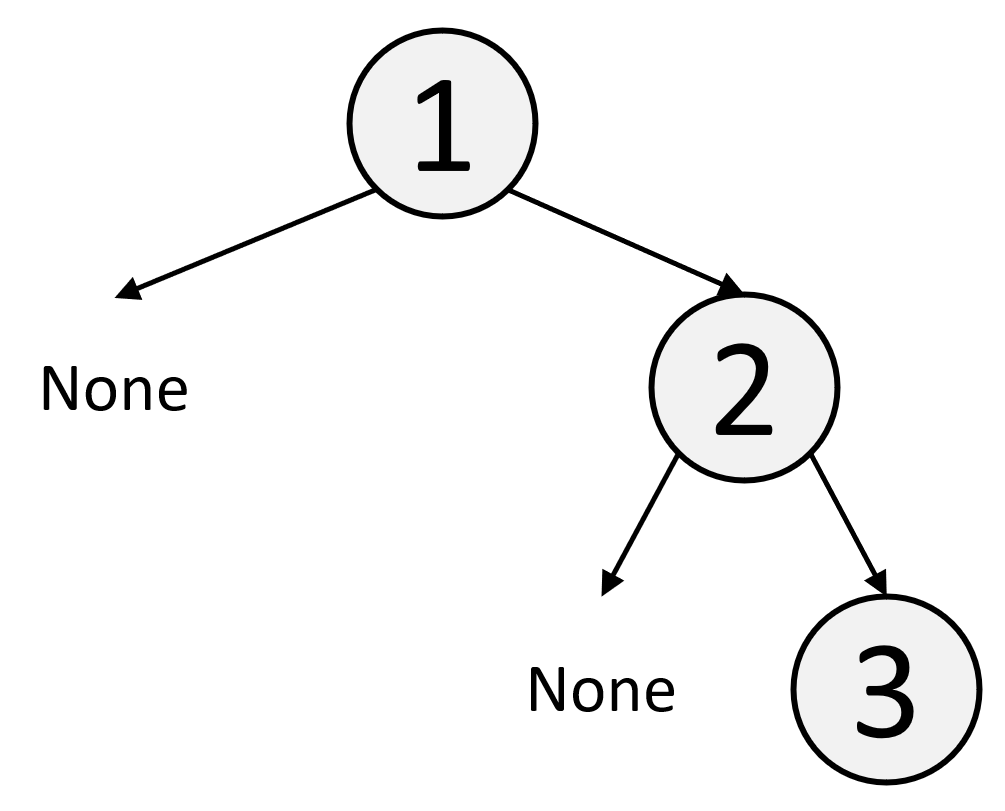

First of all, why do ordered data structures have an O(n) maintenance cost? Well, maintenance cost is just the cost of keeping your data structure updated after you edit, which is really just asking: how many elements might you mess up by making a change - and need to fix? In other words, what are the number of "affectancies" (opposite of dependencies) of the element you're modifying? For ordered/indexed data structures, the number of elements you might affect by editing index i is n-i (all elements to the right because of shifting), which is O(n) if we take an average.

Once you edit your data structure in any way, you must maintain it by the next time you read from it. We can formalize this:

The number of affectancies is set by what kind of information you're storing, like whether you're storing order (Array/LinkedList) which gives O(n), or whether just storing value (HashMap/Set) which gives O(1), or whether you're storing prefix information (Tries), etc. I like to call this number ϕ.

Putting this all together, for any data structure, we have a bound for its read and write times for one element, based on the information the data structure is storing which is encoded in ϕ!

I'm also just writing "read" instead of "next read" from now on - the meaning will be obvious in context below anyway.

Per-Element Theorem

ϕ is not only the # affectancies; it's also the average optimal maintenance cost per element. This means ϕ can be deduced from the optimal runtime that it takes to build a data structure that lets us lookup info per element.

In other words, ϕ is the per element cost:

Ordered Data Structures

For ordered data structures, you can produce different ϕ values by changing what the data structure allows us to look up.

Here, i means data structures that let us look up an element's index, and i 1 means a limited version of this where we can only look up one, two, or O(1) elements by index.

Above, we already showed that i (index lookups) imply maintenance cost of ϕ=n. It's easy to deduce that i 1 means ϕ=1. Here are the consequences:

i

Array:

O(1) read i

O(n) write i

LinkedList:

O(n) read i

O(n) write i

O(1) write i once we read i

Lazy Array * :

O(n⋅w) read i *

O(1) write i

i 1

Stack:

O(1) read i=n

O(1) write i=n

Queue (Doubly Linked):

O(1) read i=0,n

O(1) write i=0,n

Specific Index Array * :

O(1) read i=L

O(1) write i=L

Unordered Data Structures

Unordered data structures are simple because they all have O(1) maintenance cost by definition.

We use v to denote data structures that only store value. There is no point of having v 1 because the cost of v is already ϕ=1.

v

Set:

O(1) read v

O(1) write v

HashMap:

O(1) read v

O(1) write v

Sorted Data Structures

For sorted data structures, you can produce different ϕ values by changing what the data structure allows us to look up.

Here, k means data structures where you can lookup any elements by rank (by index as if we sorted the elements), like the 1st smallest element, 2nd smallest, etc. And k 1 is a limited case of this where you can only look up one, two, or O(1) of the elements by their rank.

It is hard to determine ϕ for k and k 1 - how many ranks does adding or removing a value change (it depends!), but sorting lower bounds work here. By reasoning that sorting takes nlogn and determines k information, we can deduce the per-element maintenance is just nnlogn, so ϕ=logn.

Although k and k 1 have the same ϕ=logn, the k 1 is cheaper in some unexpected ways, like only having an O(n) build step which is lower than the typical (number of elements) * (cost per element) = nϕ.

k

BST:

O(logn) read k

O(logn) add/remove

O(nlogn) build *

k 1

Heap:

O(1) read k=0

O(logn) add/remove *

O(n) build, notably not O(n log n).

Lookup Median * :

O(1) read k=n/2

O(logn) add/remove

O(n) build

Lookup fixed k=L * :

O(1) read k=L

O(logn) add/remove

O(n) build

Intermission: Sliding Window Problems

Before moving on to more data structures, I want to cover some interesting implications first, about sliding window and data stream problems.

We can do the same analysis we did for i, v, and k but for more complex algorithmic information like median median, max max, and sum sum. Below we go over what ϕ values these have, and use that to predict the runtimes of Sliding Window Max, Median, and Sum, which are traditionally pretty difficult to find optimal solutions for.

Here are the predicted ϕ values we deduced using the above theorem for Sliding Window Sum, Sliding Window Max, and Sliding Window Median, and the actual runtimes which demonstrate the predicted ϕ values were correct.

sum window

Obviously finding a sum takes O(n), so the per-element cost we'd predict for Sliding Window Sum is O(1), and ϕ=1.

Indeed, we find all costs are O(1)! Sliding Window Sum is a pretty trivial algorithm.

k 50% window

We can use the fact that k 1's ϕ value is logn to deduce that k 50% also has ϕ=logn, and so does k 50% window.

This is indeed the complexity we get in the hourglass heap solution to Sliding Window Median! It just requires a heap deletion operation in logn time which is easy to do.

max window

Finding the maximum over n elements takes O(n), so obviously the per-element cost is O(1), and ϕ=1.

This is indeed the solution we find with the monotonic queue solution to Sliding Window Max!

O(1) read sum window

O(1) step window, add, remove

O(1) read k 50% window

O(logn) step window, add, remove

O(1) read max window

O(1) step window, add, remove

Data streams are easier versions of the sliding window variants, because they only have an add operation and not a remove operation while Sliding Window problems require both, so the bounds we showed on Sliding Window are stronger.

Substring Problems

There are some interesting implications for substring problems, but it makes sense to start with prefix problems first.

I'll call prefix 1 a data structure that lets you check if a string has a character as a prefix, and prefix many chars where it checks multiple characters a prefix.

Trivially, ϕ=1, since you can check if a string has a character as a prefix in O(1) (the many-prefix case just uses hashing).

prefix 1

Simple Check for Prefix:

O(1) per character

This yields O(m) to slide the window to check the full string.

prefix many chars

Trie:

O(1) per character

This yields O(m) to check any prefix - the Trie solution.

Now that we know ϕ=1 for prefixes, we can also reason ϕ=1 for substrings! A substring is just a "sliding window" check over prefixes.

Therefore substring problems must have O(n) solutions, since they have ϕ=O(1) cost per element! This is not historically trivial at all, and these checks have names: the KMP algorithm, or the Aho-Corasick algorithm for multi-substring checks.

We'll call a data structure that lets you check if a string has a substring substring 1, and multi-substrings substring many.

substring 1

KMP:

O(1) per character

This yields O(n) to check the full string, the KMP result.

substring many

Aho Corasick:

O(1) per character

This yields O(nk) to check the full string! This is the result of Aho Corasick.

Note Aho Corasick is just a Trie with edges to make sliding window work.

Conclusion

This framework gives a way of predicting the optimal runtime of an algorithm without actually writing a solution. For example, if the monotonic queue solution to Sliding Window Max was unexpected when you first saw it, just know that you could have predicted a solution with that time complexity using this framework!

There are two main takeaways.

For any given data structure that stores information info:

You can determine ϕ in two ways:

More Complexity Bounds

- The optimal time it takes to build a data structure is at most O(ϕ⋅tsweep over inputs), although it can be less, like the Heap and heapify.

- Generally Time Complexity ≥ Space Complexity, because you can reuse space, but not time, and all operations take place somewhere in space and time.

Here's an old youtube video focusing on the ordered data structures: youtube.com/watch?v=9_9mRljRu_E

This note was written by Andrew Pareles.